What does it mean to integrate EVE with Kubernetes? This does not refer to running Kubernetes on top of EVE (k3s solutions already exist for this), but to integrating EVE, the platform, with Kubernetes at the control plane level.

To explore this further, we need to outline briefly what each product, EVE and Kubernetes, does.

Kubernetes handles:

The above occurs primarily via the API and controllers, which implement control loops over changed data sets.
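As a rough illustration of what a control loop over changed data looks like, the sketch below compares desired state with observed state and acts only on the difference. The `Store` class and the workload names are hypothetical stand-ins for the API server and a node; this is not Kubernetes code.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """Hypothetical in-memory stand-in for desired state (API) and observed state (node)."""
    desired: dict = field(default_factory=dict)   # workload name -> image
    observed: dict = field(default_factory=dict)  # workload name -> image


def reconcile(store: Store) -> list:
    """Run one pass of the control loop and return the actions taken."""
    actions = []
    # Start or update anything that is desired but missing or out of date.
    for name, image in sorted(store.desired.items()):
        if store.observed.get(name) != image:
            store.observed[name] = image
            actions.append(f"apply {name}={image}")
    # Stop anything that is running but no longer desired.
    for name in sorted(set(store.observed) - set(store.desired)):
        del store.observed[name]
        actions.append(f"delete {name}")
    return actions
```

Running `reconcile` a second time without changing `desired` produces no actions, which is the property that lets controllers be re-run safely after any change or restart.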

Kubernetes does not handle operating system deployment and software installation. A node begins its “Kubernetes life” when it already has an operating system and relevant Kubernetes software (container runtime, kubelet, kube-proxy) installed, and it performs communication and registration with the API server. The primary node-side agent between the Kubernetes control plane and the local node is the kubelet.

EVE differs from Kubernetes in several ways, beyond the actual API implementation itself.

Summarizing the differences between EVE and Kubernetes:

There are several ways to consider EVE-Kubernetes integration. Each of these needs to be explored for usefulness and feasibility. Each also needs review to ensure that it actually covers all of the EVE use cases.

At a high level, there are only two communication channels: EVE device to EVE controller, and user to EVE controller. Thus the possible points of integration are:

There are several variants of each.

Finally, it may be possible to combine them, where the controller exposes a Kubernetes-compatible API, and uses native Kubernetes to control EVE itself.

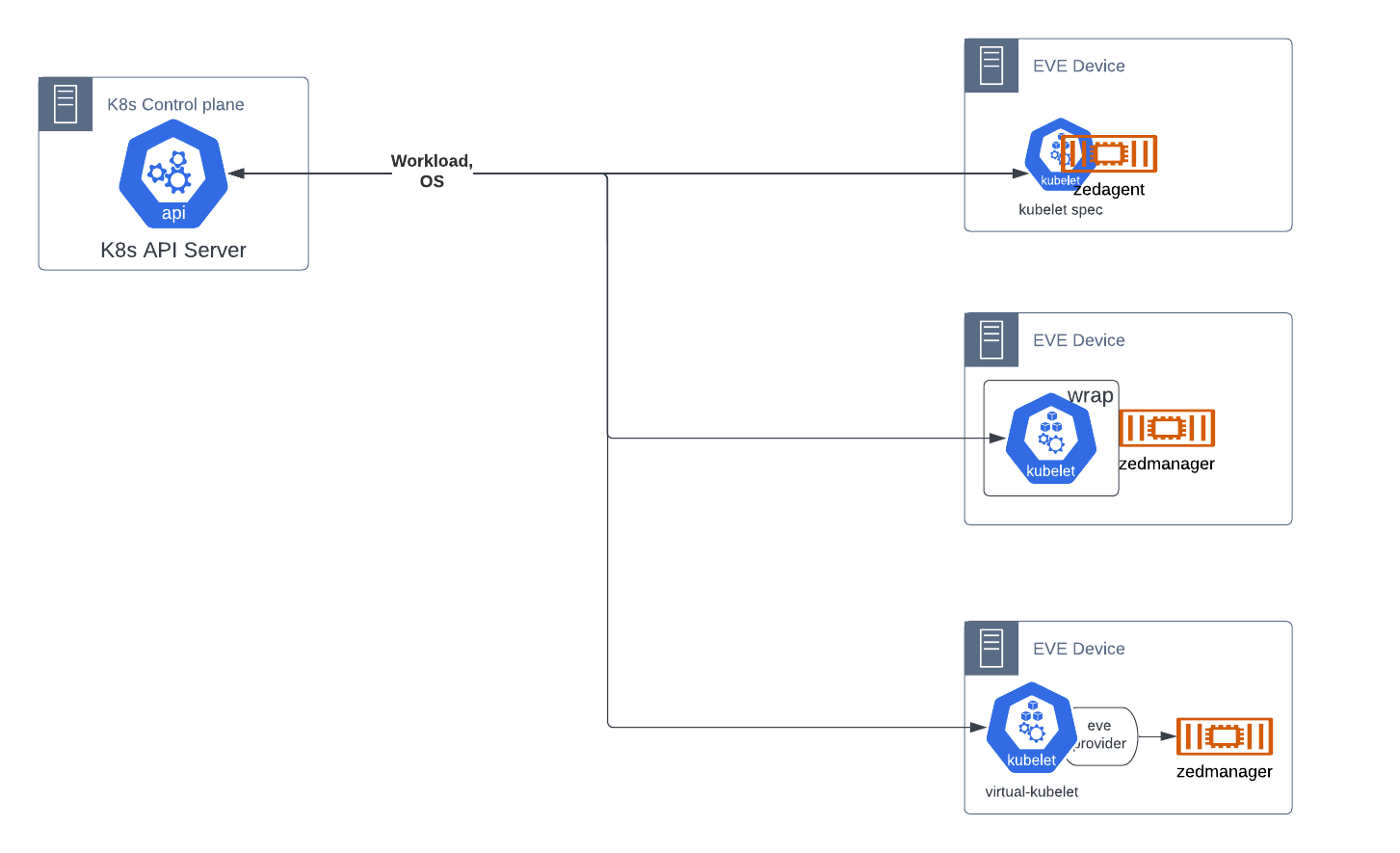

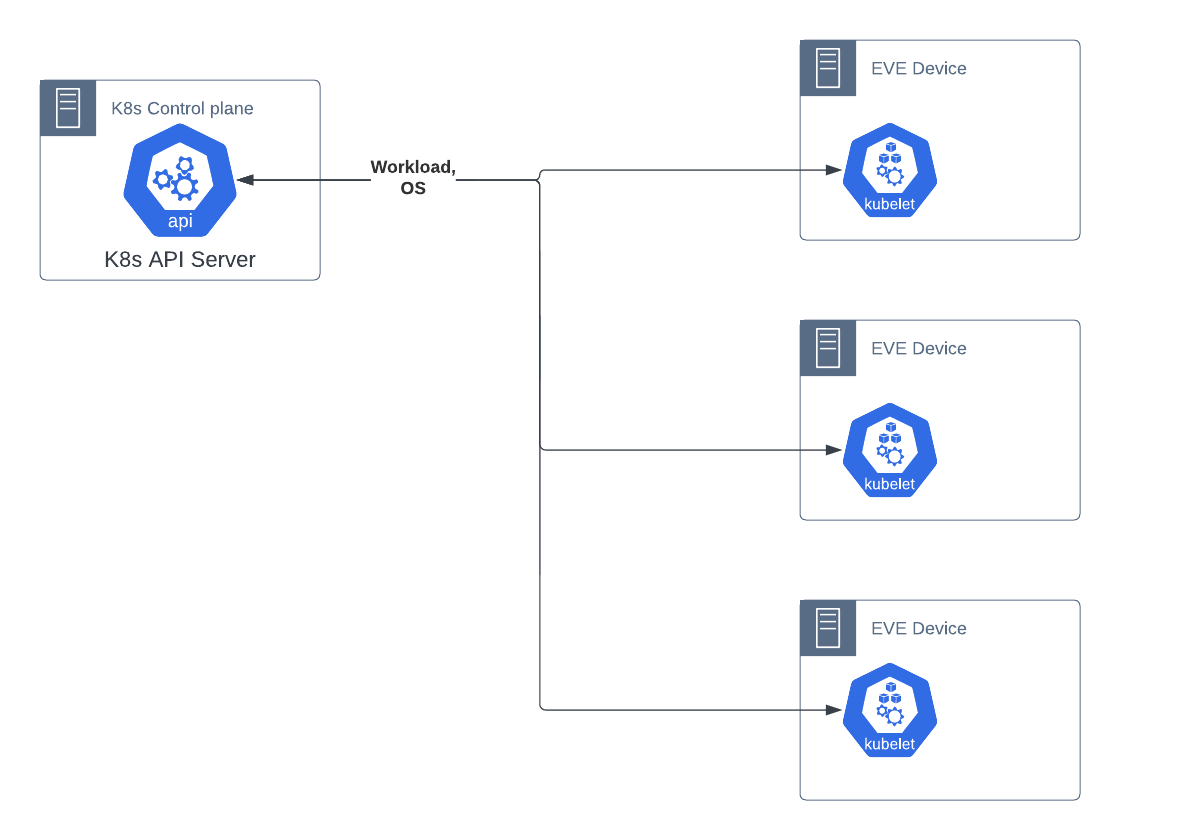

An EVE device is expected to receive workload assignment from a standard native Kubernetes cluster.

This can be implemented in several ways:

The functionality that is not available within Kubernetes, as listed above, is provided in one of two ways: the device interfaces either solely with Kubernetes, or with both Kubernetes and the EVE controller.

All functionality is received from Kubernetes. The necessary additional OS management parts of the EVE API are provided by utilizing existing capabilities in the kubelet-apiserver API, and other native Kubernetes artifacts. This assumes that such an approach is possible, which is still to be determined.

Typical flow is:

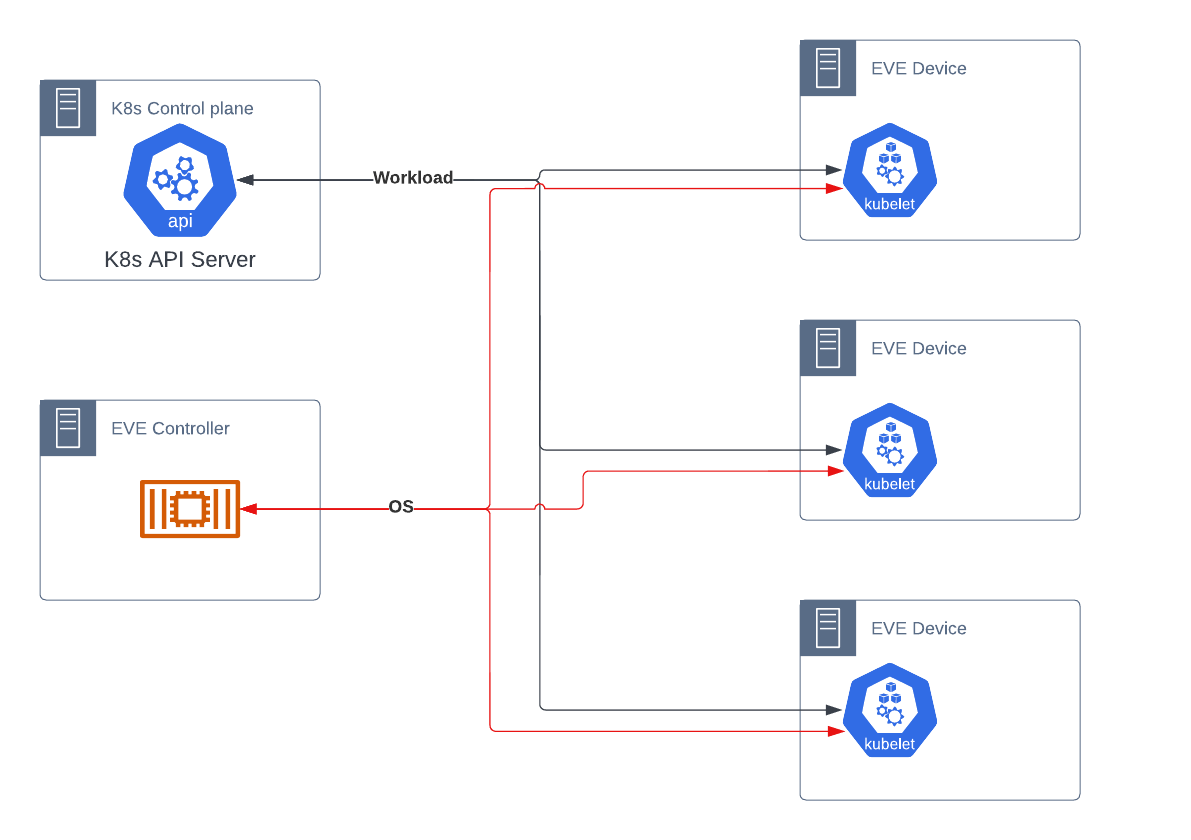

Functionality that fits within Kubernetes’ native space, specifically workload scheduling, is provided by Kubernetes. Functionality that is beyond the scope of Kubernetes, specifically management and security of the operating system, is provided by a distinct EVE controller, as today.

Typical flow is:

This method requires each EVE device to maintain active communications to two distinct controllers simultaneously. This may be simplified in one of the EVE Controller cases.

While Kubernetes itself provides only two network interfaces per pod - one loopback and one primary - it does not prevent a pod from having more network interfaces.

When Kubernetes launches a pod, the kubelet calls a local binary on the host, a CNI plugin, to create the network interface (normally eth0), attach it to a network, and assign an IP. This interface is expected to provide communication with all other pods over a flat network, with services, and with the off-cluster network.

The CNI plugin, however, can do whatever it wants with the pod, provided it meets the minimum defined requirements of Kubernetes and the CNI specification. Thus, a CNI plugin could assign the normal default network that Kubernetes expects, and then 3 more interfaces connected to different networks. Further, a plugin could act as an intelligent multiplexer, calling the default plugin and then one or more different ones, each of which assigns different interfaces.
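The multiplexer idea can be sketched as follows. This is a simplified model, not a real CNI plugin: `fake_delegate` is a hypothetical stand-in for executing a plugin binary, the `address` field in each config is an assumption made for illustration (real plugins use IPAM), and the `net1`, `net2` naming follows the convention Multus uses for additional interfaces.

```python
from typing import Callable

# A delegate takes (network config, interface name) and returns a CNI-style result.
Delegate = Callable[[dict, str], dict]


def fake_delegate(conf: dict, ifname: str) -> dict:
    """Hypothetical stand-in for invoking a real CNI plugin binary with ADD."""
    return {
        "cniVersion": conf["cniVersion"],
        "interfaces": [{"name": ifname}],
        # "address" is an illustrative field; real plugins obtain it via IPAM.
        "ips": [{"address": conf["address"], "interface": 0}],
    }


def add_pod_networks(default_conf: dict, extra_confs: list,
                     run: Delegate = fake_delegate) -> list:
    """Attach the default cluster network first, then each additional network."""
    # The default network always becomes eth0, as Kubernetes expects.
    results = [run(default_conf, "eth0")]
    # Additional networks get their own interfaces: net1, net2, ...
    for index, conf in enumerate(extra_confs, start=1):
        results.append(run(conf, f"net{index}"))
    return results
```

The ordering matters: the default delegate runs first so that the pod still satisfies the single flat network Kubernetes requires, regardless of what the extra delegates do.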

To provide support for these capabilities, the Kubernetes Network Plumbing Working Group has been defining standards for pods to declare their need for additional networks without breaking the existing Kubernetes API. They do so by:

The working group has published a specification for multiple networks, along with a reference implementation of a multiple-network CNI plugin, Multus.
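In practice, the working group's approach pairs a `NetworkAttachmentDefinition` custom resource with a pod annotation, `k8s.v1.cni.cncf.io/networks`, that lists the additional networks by name. The sketch below builds both objects as plain dictionaries. The resource names, the macvlan settings, and the image are illustrative assumptions, not values from this document.

```python
import json

# Annotation key defined by the Network Plumbing Working Group specification.
NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def network_attachment(name: str, cni_config: dict) -> dict:
    """Build a NetworkAttachmentDefinition; spec.config holds the CNI config as a JSON string."""
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_with_networks(pod_name: str, image: str, networks: list) -> dict:
    """Build a Pod that requests extra networks via a comma-separated annotation."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": pod_name,
            "annotations": {NETWORKS_ANNOTATION: ",".join(networks)},
        },
        "spec": {"containers": [{"name": pod_name, "image": image}]},
    }


# Hypothetical example: one extra macvlan network attached to a pod.
lan = network_attachment("lan", {
    "cniVersion": "0.3.1",
    "type": "macvlan",
    "master": "eth1",
    "ipam": {"type": "dhcp"},
})
pod = pod_with_networks("sensor-gateway", "example/sensor-gateway:latest", ["lan"])
```

Because the request lives in an annotation and a custom resource, the core Pod API is left unchanged, and clusters without a multi-network plugin simply ignore it.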

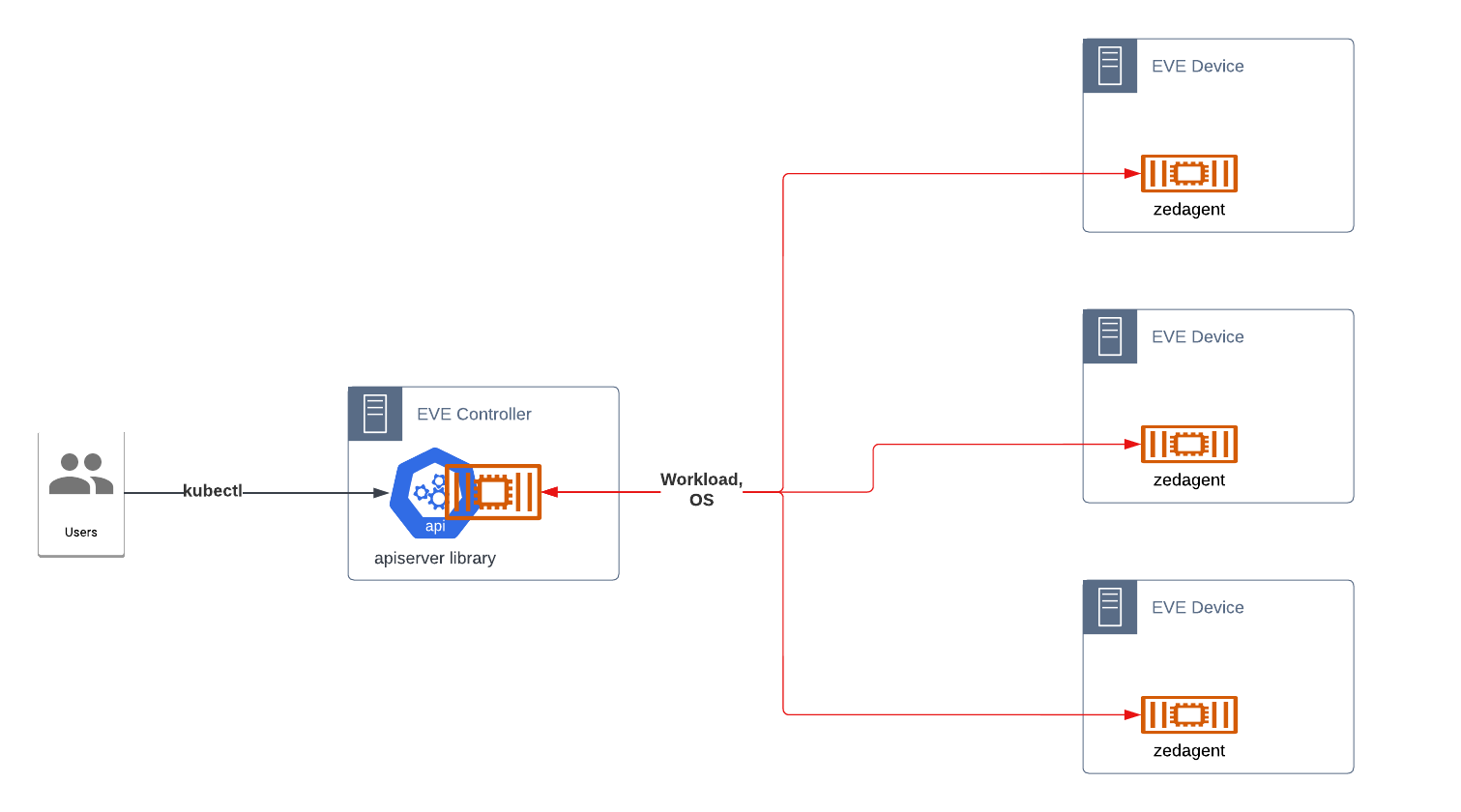

We keep the existing EVE device structure and the existing API between the EVE device and the controller. Instead, the user-facing controller API is replaced with a Kubernetes-compatible API. This API is not native Kubernetes, but instead uses Custom Resource Definitions (CRDs) to create appropriate resources for managing EVE devices, both workloads and operating systems.
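To make the CRD idea concrete, the sketch below generates a `CustomResourceDefinition` manifest as a dictionary. The API group `eve.example.org`, the `EdgeDevice` kind, and its `serial` and `baseOSVersion` fields are all hypothetical; the actual resource model is not defined in this document.

```python
GROUP = "eve.example.org"  # hypothetical API group, for illustration only


def crd(kind: str, plural: str, spec_properties: dict) -> dict:
    """Build a minimal apiextensions.k8s.io/v1 CustomResourceDefinition."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # Kubernetes requires the CRD name to be "<plural>.<group>".
        "metadata": {"name": f"{plural}.{GROUP}"},
        "spec": {
            "group": GROUP,
            "scope": "Namespaced",
            "names": {"kind": kind, "plural": plural, "singular": kind.lower()},
            "versions": [{
                "name": "v1alpha1",
                "served": True,
                "storage": True,
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {
                        "spec": {"type": "object", "properties": spec_properties},
                    },
                }},
            }],
        },
    }


# Hypothetical resource describing one EVE device and its base OS version.
edge_device = crd("EdgeDevice", "edgedevices", {
    "serial": {"type": "string"},
    "baseOSVersion": {"type": "string"},
})
```

A second resource for workloads could be produced with the same helper, which keeps OS management and workload management as separate, independently versioned resources.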

The primary goal would be standardization on a Kubernetes-compatible API that could be used across controllers. This would greatly improve adam, simplify eden, and provide better integrations with controllers.

EVE might or might not be a kubelet in this scenario; the variants below are agnostic as to how the controller communicates with EVE.

The API is to be defined and published as part of lfedge, as an optional basis for EVE controllers, but would be extensible so that commercial controllers can provide additional capabilities.

There are two implementation variants:

Note that it is not strictly necessary to implement anything at all. We could define APIs that would work as a standard. However, lack of a proven reference implementation would weaken adoption.

Adam, the current OSS controller, is a single process which exposes a minimal REST API. The design would extend Adam to expose a Kubernetes-compatible API to the end-user.

Implementing the Kubernetes API directly is complex. A starting point may be https://github.com/kubernetes/sample-apiserver or kcp. Part of kcp is a bundling of multiple apiserver libraries into a single reusable library. However, much of the kcp project has been focused on multiple cluster management.

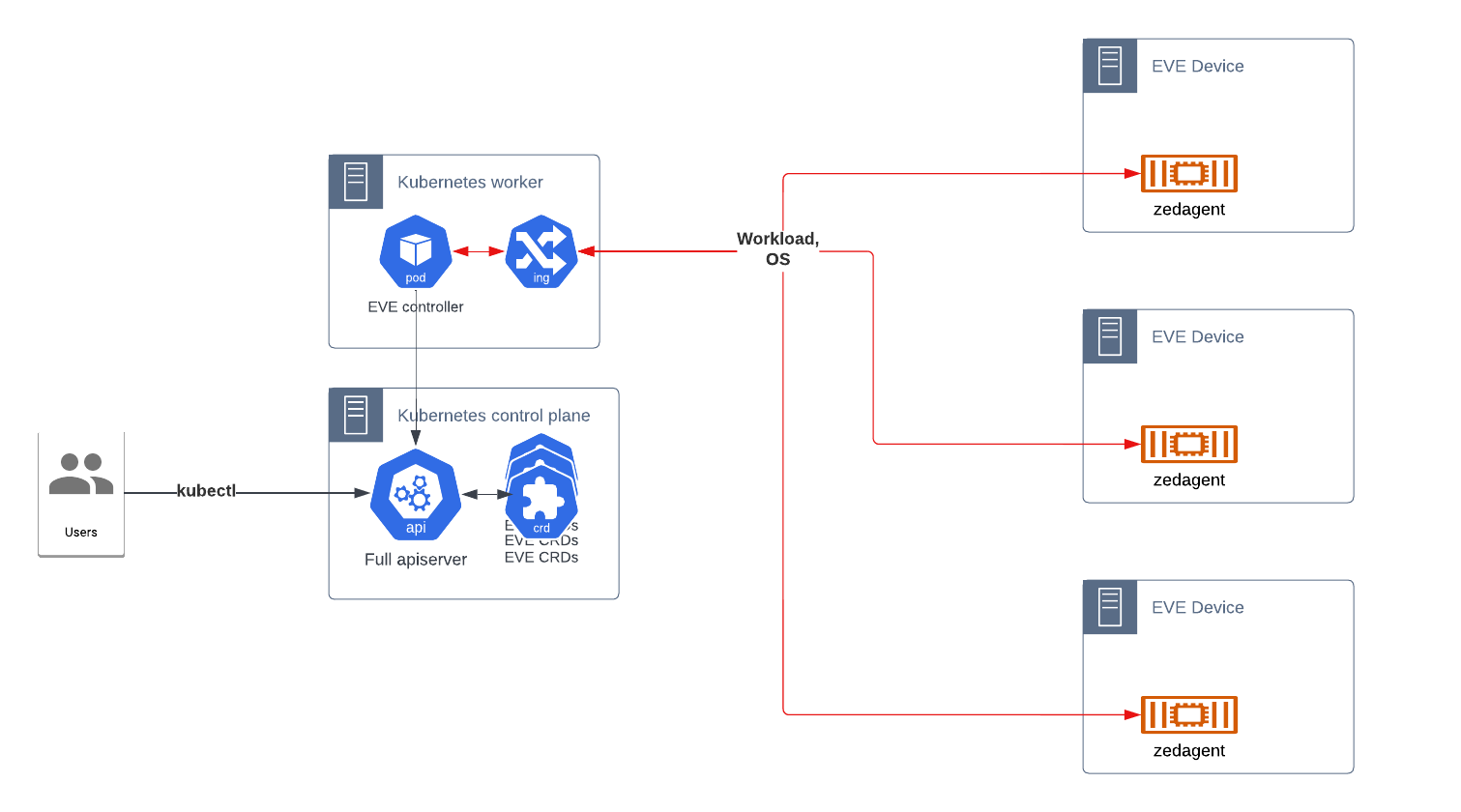

Adam, or a next-generation version, is recreated as a deployment intended to run in Kubernetes. The manifests create the CRDs necessary, while the container implements the control loops for managing those resources, as well as exposing the endpoints to handle the EVE device API.
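One way to picture the container's job is as a translation step: it reads custom resources from the cluster and assembles the configuration served to each device over the EVE device API. The sketch below shows only that translation. The `EdgeDevice` and `EdgeApplication` kinds and their fields are hypothetical, and the returned dictionary is not the real EVE configuration format.

```python
def device_config(device_name: str, resources: list) -> dict:
    """Collect the desired state for one device from a list of custom resources."""
    config = {"device": device_name, "baseOSVersion": None, "apps": []}
    for res in resources:
        spec = res.get("spec", {})
        if res["kind"] == "EdgeDevice" and res["metadata"]["name"] == device_name:
            # OS-level settings come from the device's own resource.
            config["baseOSVersion"] = spec.get("baseOSVersion")
        elif res["kind"] == "EdgeApplication" and spec.get("device") == device_name:
            # Workloads are separate resources that reference the device by name.
            config["apps"].append(
                {"name": res["metadata"]["name"], "image": spec["image"]}
            )
    # Sort so the served config is stable regardless of listing order.
    config["apps"].sort(key=lambda app: app["name"])
    return config
```

Keeping this function free of I/O means the same logic can be driven by a watch on the cluster or by a device polling the endpoint.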

This is much more straightforward than implementing the actual API as in the previous variant, but would require running a dedicated Kubernetes cluster. It is possible to scale it down to a single-node cluster, if desired.

A possible combination of the above is the EVE Controller deployed into Kubernetes, exposing a Kubernetes-compatible API, with the kubelet API leveraged to provide communications with EVE devices. While more difficult to design, it would simplify the deployment of and integration with controllers in the future.