Introduction

Sometimes a BaseOs upgrade fails because of transient error conditions. In general, EVE provides eventual consistency: it retries an operation after a failure in case the failure condition has gone away. However, a BaseOs update with its associated reboot is quite disruptive, and repeating it in a loop is even more so. Hence it makes sense to require user intervention before a failed BaseOs upgrade is retried.

Currently, the Device Config API has no retry mechanism. The controller must first remove the BaseOs configuration, wait for the device to sync up, and then configure the BaseOs again. This is not very user-friendly.

This document describes the support to retry a failed BaseOs upgrade.

Proposed Solution

Introduce a new command "baseos_upgrade_retry" for devices.

EVE API

    diff --git a/api/proto/config/devconfig.proto b/api/proto/config/devconfig.proto
    index c58376ab7..7dc9c59e2 100644
    --- a/api/proto/config/devconfig.proto
    +++ b/api/proto/config/devconfig.proto
    @@ -83,6 +83,19 @@ message EdgeDevConfig {
       // if we set new epoch, EVE sends all info messages to controller
       // it captures when a new controller takes over and needs all the info be resent
       int64 controller_epoch = 25;
    +
    +  // Retry the BaseOs upgrade for the configured image ONLY if the image
    +  // upgrade has failed. If the currently configured image is in FAILED state in the other
    +  // partition, retry the image upgrade. ELSE - Do nothing. Just update the
    +  // baseos_upgrade_retry counter in Info message.
    +  DeviceOpsCmd baseos_upgrade_retry = 26;
     }

    diff --git a/api/proto/info/info.proto b/api/proto/info/info.proto
    index 7bead8777..230452ac1 100644
    --- a/api/proto/info/info.proto
    +++ b/api/proto/info/info.proto
    @@ -344,6 +344,13 @@ message ZInfoDevice {
       // Are we in the process of rebooting EVE?
       bool reboot_inprogress = 41;
    +  // BaseOsUpgrade Retry Counter.
    +  // if status_baseOs_upgrade_retry_counter != config.baseOs_upgrade_retry_counter &&
    +  //    configured_version_partition.State == ERROR:
    +  //        Trigger Upgrade
    +  // status_baseOs_upgrade_retry_counter = config.baseOs_upgrade_retry_counter
    +  // schedule_info_msg_to_be_sent()
    +  uint32 baseOs_upgrade_retry_counter = 42;
     }

Note: Even when the retry is a no-op, the device sends an Info message to the controller to report its updated baseos_upgrade_retry_counter.
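The counter handling described above can be sketched in Go as follows. This is a minimal illustration, not EVE's actual code: the `Device` type, its fields, and `HandleRetryConfig` are hypothetical names, and the sketch assumes that the counter update and the Info message happen whenever the configured counter changes, with the upgrade itself triggered only when the configured image is in the failed state.

```go
package main

import "fmt"

// Device holds only the state this sketch needs. All names are hypothetical.
type Device struct {
	StatusRetryCounter    uint32 // last counter value acted on, reported in Info
	ConfiguredImageFailed bool   // configured image is in FAILED state in the other partition
	UpgradesTriggered     int    // stand-in for actually starting an upgrade
	InfoMessagesSent      int    // stand-in for scheduling an Info message
}

// HandleRetryConfig applies the retry counter received in the device config.
// It returns true only when an upgrade retry was actually triggered.
func (d *Device) HandleRetryConfig(configCounter uint32) bool {
	if configCounter == d.StatusRetryCounter {
		return false // no new retry request
	}
	triggered := false
	if d.ConfiguredImageFailed {
		d.UpgradesTriggered++ // intended use case: retry the failed upgrade
		triggered = true
	}
	// Even for a no-op, record the counter and report it to the controller.
	d.StatusRetryCounter = configCounter
	d.InfoMessagesSent++
	return triggered
}

func main() {
	d := &Device{StatusRetryCounter: 1, ConfiguredImageFailed: true}

	// Counter bumped while the configured image is failed: retry is triggered.
	fmt.Println(d.HandleRetryConfig(2), d.StatusRetryCounter, d.InfoMessagesSent)

	// Same counter again: nothing happens.
	fmt.Println(d.HandleRetryConfig(2), d.StatusRetryCounter, d.InfoMessagesSent)

	// Counter bumped while the image is not failed: no-op, but Info is still sent.
	d.ConfiguredImageFailed = false
	fmt.Println(d.HandleRetryConfig(3), d.StatusRetryCounter, d.InfoMessagesSent)
}
```

Comparing counters rather than using a boolean flag lets the controller see, from the Info message alone, which retry request the device has already processed.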

EVE Support

- EVE needs to store the status of each partition (Success / Failure). This is already done today.

- If the currently configured image is in FAILED state in the other partition, retry the image upgrade. (Intended use case.)

- Otherwise, do nothing except update the baseos_upgrade_retry counter and send an Info message carrying it to the Controller.

Note: EVE currently retries download / verify / install errors automatically.

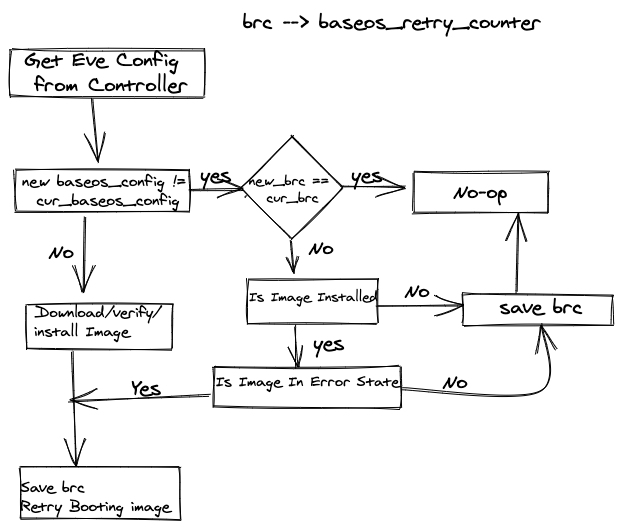

Control Flow:

Some Scenarios for Implementation

1. Configured EVE Image in Error State, Fallback Image Currently Active

This is the regular scenario for the feature:

- The device is currently running 6.1.0 (partition IMGA) (baseos_upgrade_retry_counter = 1).

- The controller configures 6.4.0 as the active image (baseos_upgrade_retry_counter = 1).

- EVE receives the new config, downloads and verifies the image, and starts upgrading to 6.4.0 (IMGB).

- EVE boots 6.4.0 and enters the testing phase.

- Testing of 6.4.0 fails and EVE falls back to running 6.1.0.

- The user triggers a retry (baseos_upgrade_retry_counter = 2).

- As indicated in the Control Flow section, this triggers booting into 6.4.0 again.

2. BaseOs Using Volumes

The BaseOs config still carries only one active image name. EVE might download multiple volumes (images), but there can be only one active EVE image volume.

BaseOs Using Volumes with Current Partition Scheme:

EVE currently has the concept of partitions and supports two of them:

- The partition of the currently running image (call it PART-USED)

- The partition of the previously booted image (PART-UNUSED)

Any new image is installed in PART-UNUSED. The partition also records whether that image succeeded or failed. This is all that is needed to support this feature.

In the case where the Controller supports multiple volumes, and hence multiple partitions, each partition needs to maintain the result of its last image: SUCCESS / ERROR / UNKNOWN.

This is used to answer the question "is_image_in_error_state()".
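A minimal Go sketch of such per-partition bookkeeping is shown below. The `Partition` type, the `ImageResult` values, and the function name `isImageInErrorState` are assumptions made for illustration (only the SUCCESS / ERROR / UNKNOWN states and the question itself come from this document); the real data structures in EVE may differ.

```go
package main

import "fmt"

// ImageResult is the outcome of the last image installed in a partition.
type ImageResult string

const (
	ResultUnknown ImageResult = "UNKNOWN"
	ResultSuccess ImageResult = "SUCCESS"
	ResultError   ImageResult = "ERROR"
)

// Partition is a hypothetical record of what a partition holds.
type Partition struct {
	Label   string      // e.g. IMGA, IMGB
	Version string      // version of the last image installed here
	Result  ImageResult // result of that image
}

// isImageInErrorState reports whether any partition holds the given
// version with an ERROR result. Unknown versions are not in error.
func isImageInErrorState(parts []Partition, version string) bool {
	for _, p := range parts {
		if p.Version == version && p.Result == ResultError {
			return true
		}
	}
	return false
}

func main() {
	parts := []Partition{
		{Label: "IMGA", Version: "6.1.0", Result: ResultSuccess},
		{Label: "IMGB", Version: "6.4.0", Result: ResultError},
	}
	fmt.Println(isImageInErrorState(parts, "6.4.0")) // true
	fmt.Println(isImageInErrorState(parts, "6.1.0")) // false
	fmt.Println(isImageInErrorState(parts, "7.0.0")) // false
}
```

Because the lookup works on a slice rather than on a fixed pair of partitions, the same check would carry over unchanged if more than two partitions are supported later.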